By the time you read this, the UK may, or may not, have obtained some clarity over its future outside of, or still inside, the European Union. However, whatever the outcome of the General Election, it’s not immediately obvious how a result for either right or left will actually provide real direction and certainty in the foreseeable future. Leaving Europe still requires the negotiation of what would seem to be a massively complicated trade agreement. Remaining in Europe would appear to require another referendum, and so months of further delay.

For many businesses contemplating a digital future, a similar lack of confidence seems to be the order of the day. Rushing headlong to embrace the ‘amazing’ potential of the various technologies which underpin digital transformation may well be fraught with as many pitfalls as benefits – not least because many of these technologies (like a final Brexit deal) will take quite some time to become both widely available and affordable (5G being a case in point).

While remaining in the EU is still an option for the UK, keeping the same IT infrastructure and expecting to stay competitive is not an option for almost any business. That said, even a political remain deal will almost certainly come with some fundamental changes, either between the UK and the rest of the EU countries and/or for all within the EU community. In other words, whether it’s politics or technology, doing nothing is impossible, but what to do is not that easy to determine.

2020 will see some of the new technologies and ideas begin to gain significant traction but, sorry to be a doom and gloom merchant, it will probably be characterised by continuing confusion for many, if not all, businesses, which know they need to do something but are not sure quite what, where or when.

All I can say is: make sure you do your homework before you make any changes and, as with the politicians, don’t believe the hype or fake news!

Happy Christmas to one and all.

New study finds that 74% of European organisations are struggling to introduce digital tools and practices to improve decision making.

The majority (74 per cent) of businesses across the UK and Europe are still facing major obstacles trying to introduce new digital tools and practices to support their decision making, according to a new report.

The primary barrier, according to 57 per cent of European C-Suite leaders, is data fragmentation. Siloed data results in a lack of visibility across business processes and, in turn, a poor decision-making culture.

These difficulties are compounded by the finding that 65 per cent of leaders feel significant pressure to deliver a successful digital transformation strategy. They need to be able to act and react fast enough to dynamic market conditions and innovation opportunities – or else open themselves up to critical risks.

Domo, a business-to-business cloud software company, polled 375 employees across multiple industries, in partnership with IDC, to understand how they are using modern digital technologies to improve decision making and help deliver value across the business.

The study also found that C-Suite leaders find it difficult to create a holistic view of their business. This prevents leaders from taking an integrated approach to digital transformation, as they struggle to connect the dots between varying stakeholders. Key barriers include:

“Leaders are facing unprecedented amounts of pressure from technological, social and regulatory forces. It’s no longer about driving digital transformation in vertical stacks, but rather horizontally across the entire enterprise,” said Ian Tickle, Senior Vice President and General Manager for EMEA at Domo. “However, when data is stuck in silos across the business it hinders new and efficient ways of working, and ultimately stalls digital transformation”.

The research predicts that by 2029, 75 per cent of European organisations will be completely digitally transformed, becoming what IDC defines as a ‘Digital Native Enterprise’. It is clear, however, that achieving this requires the right processes to be put in place: plans and predictions can’t happen without effective business decision-making.

Most firms have not fully embraced digitisation of business processes, products and services. For example, only 4 per cent of ‘digital laggards’ have integrated tools such as Domo to harness the power of their data and accelerate time-to-value, compared with 67 per cent of ‘digital leaders’, who as a result are able to react quickly and accurately enough to cope with dynamic market conditions.

Tickle summarises: “True Digital Transformation isn’t just about creating a ‘digital outside’ to your organisation: leaders must consider every business element, investing in reinventing their data, expertise and workflows to create advantage. Having effective business decision-making tools that harness all the data available is the key foundational step to advance any digital transformation journey.”

Human-machine collaboration the new normal for successful businesses

Researchers find that organisations need content intelligence to advance digital transformation.

A new survey from leading research firm IDC has revealed the true extent of software robots supporting humans in the workplace. The IDC whitepaper, Content Intelligence for the Future of Work, sponsored by ABBYY, indicates that the contribution of software robots, or digital workers, to the global workforce will increase by over 50% in the next two years. These results, from a survey of 500 senior decision-makers in large enterprises, illustrate a fundamental shift to a future of work dependent on human-machine collaboration.

“A growing number of employees will find themselves working side-by-side with a digital coworker in the future as technology automates many work activities,” commented Holly Muscolino, Research Vice President of Content and Process Strategies and the Future of Work at IDC. “Think human and machine. The human-machine collaboration is not just the future of work, but it is the new normal for today's high-performing enterprises.”

It is not just mundane, repetitive jobs like data input that new digital colleagues will help human workers complete in the years ahead. The growth of machine learning (ML) through human-centric artificial intelligence (AI) means robot assistants will also help employees make better decisions. In most cases, these technologies enhance rather than replace human capabilities. For example, the survey found that the use of technology to evaluate information will grow by 28% in two years, and that 18% of activities related to reasoning and decision making will be performed by machines.

IDC forecasts that the intelligent process automation (IPA) software market, which includes content intelligence and robotic process automation (RPA), will grow from $13.1 billion in 2019 to $20.7 billion in 2023. Since many of the repetitive processes and tasks that are well suited to automation by RPA are document- and content-centric, content intelligence technologies frequently go hand-in-hand with RPA in intelligent process automation use cases. Automation initiatives will also be enabled by process intelligence, a new generation of process mining tools providing complete visibility into business processes – the critical foresight needed to improve the success of an IPA project.
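For readers who want the growth rate behind IDC's forecast, the two market figures imply a compound annual growth rate (CAGR) of roughly 12% over the four years from 2019 to 2023. The short Python sketch below shows the arithmetic; the `cagr` helper is our own illustration rather than an IDC method, and it assumes growth compounds evenly each year.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values a given number of years apart."""
    return (end_value / start_value) ** (1 / years) - 1

# IDC's IPA software market figures, in billions of US dollars
ipa_2019 = 13.1
ipa_2023 = 20.7

# Assumes even compounding across the four years 2019 to 2023
rate = cagr(ipa_2019, ipa_2023, 2023 - 2019)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 12.1%
```

An even 12% a year is only a smoothing assumption; IDC's forecast may not grow evenly from year to year.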

Over 40% of survey respondents have experienced a notable increase in customer satisfaction and employee productivity by deploying content intelligence technologies as part of their digital transformation strategy. Additionally, more than a third of respondents saw an improvement in responsiveness to customers, new product or revenue opportunities, increased visibility and/or accountability, or increased customer engagement.

“The IDC survey proves that automation can and should be human-centric, augmented with artificial intelligence,” said Neil Murphy, VP Global Business Development at ABBYY. “Ethical, responsible automation will create a more productive, happier future where human workers can focus on higher-level, creative and socially responsible tasks, and customers get better experiences with faster service. Businesses that are early adopters of content intelligence within their automation platforms will gain a significant competitive edge.”

Other key findings:

Manufacturers plan to invest more than ever in smart factories but challenges in scaling must be overcome.

A new study from the Capgemini Research Institute has found that smart factories could add at least $1.5 trillion to the global economy through productivity gains, improvements in quality and market share, and better customer service. However, two-thirds of this overall value is still to be realized: efficiency by design and operational excellence through closed-loop operations will make equal contributions. According to the new research, China, Germany and Japan are the top three countries in smart factory adoption, closely followed by South Korea, the United States and France.

The report, entitled “Smart Factories @ Scale”, identified two main challenges to scaling up: IT-OT convergence, and the range of skills and capabilities required to drive the transformation, including cross-functional capabilities and soft skills in addition to digital talent. The report also highlights how the technology-led disruption towards an ‘Intelligent Industry’ is an opportunity for manufacturers striving to find new ways to create business value, optimize their operations and innovate for a sustainable future.

Key findings of the study, which surveyed over 1000 industrial company executives across 13 countries, include:

Organizations are showing an increasing appetite and aptitude for smart factories: compared to two years ago, more organizations are progressing with their smart initiatives today, and one-third of factories have already been transformed into smart facilities. Manufacturers now plan to create 40% more smart factories in the next five years and to increase their annual investments by 1.7x compared with the last three years.

The potential value add from smart factories is bigger than ever: based on this potential for growth, Capgemini estimates that smart factories can add between $1.5 trillion and $2.2 trillion to the global economy over the next five years. In 2017, Capgemini found that 43% of organizations had ongoing smart factory projects; two years on, that figure has risen to 68%. 5G is set to become a key enabler, as its features will give manufacturers the opportunity to introduce or enhance a variety of real-time, highly reliable applications.

Scaling up is the next challenge for Industry 4.0: despite this positive outlook, manufacturers say success is hard to come by, with just 14% characterizing their existing initiatives as ‘successful’ and nearly 60% of organizations saying that they are struggling to scale. The two main challenges to scale up are:

· The IT-OT convergence, including digital platform deployment and integration, data readiness and cybersecurity, which will be critical to ensuring digital continuity and enabling collaboration. Agnostic and secure multilayer architectures will allow a progressive convergence.

· In addition to digital talent, a range of skills and capabilities will be required to drive smart factory transformation, including cross-functional profiles such as engineering-manufacturing, manufacturing-maintenance and safety-security. Soft skills, such as problem solving and collaboration, will also be critical.

According to the report, organizations need to learn from high performers (10% of the total sample), which make significant investments in the foundations (digital platforms, data readiness, cybersecurity, talent and governance) and take a well-balanced approach to “efficiency by design” and “effectiveness in operations”, leveraging the power of data and collaboration.

Jean-Pierre Petit, Director of Digital Manufacturing at Capgemini said: “A factory is a complex and living ecosystem where production systems efficiency is the next frontier rather than labor productivity. Secure data, real-time interactions and virtual-physical loopbacks will make the difference. To unlock the promise of the smart factory, organizations need to design and implement a strong governance program and develop a culture of data-driven operations.”

“The move to an Intelligent Industry is a strategic opportunity for global manufacturers to leverage the convergence of Information Technology and Operational Technology, in order to change the way their industries will operate and be future ready,” he further added.

Mourad Tamoud, EVP, Global Supply Chain Operations at Schneider Electric said: “Through Schneider Electric’s TSC4.0 Transformation, Tailored, Sustainable & Connected 4.0, a sustainable and connected journey which integrates the Smart Factory initiative, we have created a tremendous dynamic. We had started with just 1 flagship pilot several years ago and towards the end of 2019, we have over 70 Smart Factory sites certified with external recognition by the World Economic Forum. By training our managers, engineers, support staff, and operators, we have equipped them with the right knowledge and competences. In parallel, we have also started to scale this experience across the organization through a virtual network to achieve such a fast ramp-up.”

“This is only the beginning - we will continue to innovate by leveraging internally and externally our EcoStruxure™ solution - an IoT enabled, plug and play, open architecture and platform - and use the latest best practices in the digital world,” he further added.

Two thirds (67%) of businesses say that driving collaboration between security and IT ops teams is a major challenge.

Research released by Tanium and conducted by Forrester Consulting has found that strained relationships between security and IT ops teams leave businesses vulnerable to disruption, even with increased spending on IT security and management tools.

According to the study of more than 400 IT leaders at large enterprises, 67% of businesses say that driving collaboration between security and IT ops teams is a major challenge, which not only hampers team relationships but also leaves organisations open to vulnerabilities. Over 40% of businesses with strained relationships consider maintaining basic IT hygiene a challenge, compared with 32% of those with good partnerships. In fact, it takes teams with strained relationships nearly two weeks longer to patch IT vulnerabilities than teams with healthy relationships (37 business days versus 27.8 business days).
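The "nearly two weeks" figure can be checked directly from the two patching averages. A minimal Python sketch, assuming a five-day working week (our assumption; the study reports only business days):

```python
strained_days = 37.0        # average business days to patch, strained relationships
healthy_days = 27.8         # average business days to patch, healthy relationships
business_days_per_week = 5  # assumption: a five-day working week

gap_days = strained_days - healthy_days
gap_weeks = gap_days / business_days_per_week
relative_slowdown = gap_days / healthy_days  # extra time as a share of the healthy-team average

print(f"{gap_days:.1f} business days, {gap_weeks:.2f} working weeks, {relative_slowdown:.0%} slower")
# 9.2 business days, 1.84 working weeks, 33% slower
```

In other words, on these averages patching takes roughly a third longer when the two teams are at odds.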

The study also found that increased investment in IT solutions has not translated to improved visibility of computing devices and has created false confidence among security and IT ops teams in the veracity of their endpoint management data.

Increased investment without improved visibility

In recent years, there has been a considerable investment in security and IT operations tools, as well as an increased focus at the board level on cybersecurity. According to the study, 81% of respondents feel very confident that their senior leadership/board has more focus on IT security, IT operations and compliance than two years ago.

Enterprises who reported budget increases said they have seen considerable additional investment in IT security (18.3%) and operations (10.9%) over the last two years, with teams procuring an average of five new tools over this same time period.

Misplaced confidence leaves firms vulnerable

Despite the increased investment in IT security and operational tools, businesses have a false sense of security regarding how well they can protect their IT environment from threats and disruption. 80% of respondents claimed that they can take action instantly on the results of their vulnerability scans and 89% stated that they could report a breach within 72 hours. However, only half (51%) believe they have full visibility into the vulnerabilities and risks and fewer than half (49%) believe they have visibility of all hardware and software assets in their environment.

The study also showed that 71% of respondents struggle to gain end-to-end visibility of endpoints and their health, which could lead to consequences such as poor IT hygiene, limited agility to secure the business, vulnerability to cyber threats and poor collaboration between teams.

Chris Hodson, EMEA CISO at Tanium, said: “IT security is increasingly a boardroom-level issue and businesses have accordingly started to invest much more in shoring up their defences. Yet there’s a prevailing misconception that investing in multiple point solutions is the most comprehensive way to prepare for cyberthreats. In fact, quite the opposite is true. Having multiple pieces of cybersecurity software is helping to cement these internal tensions between IT operations and security teams, as well as contributing to this increasingly siloed approach to security, which further leaves the business vulnerable.

“Our research suggests that increased investment in IT solutions has not translated to improved visibility of computing devices and created misplaced confidence among security and IT ops teams. The truth is that companies who rely on a gamut of point solutions, but lack complete visibility of their IT environment, are essentially basing their IT security posture on a coin flip.”

Unified endpoint solutions allow firms to operate at scale

A unified endpoint management and security solution – a common toolset for both security and IT ops – can help address these challenges. In the study, IT decision makers stated that a unified solution would allow enterprises to operate at scale (59%), decrease vulnerabilities (54%), and improve communication between security and operations teams (52%).

IT decision makers also say that a unified endpoint solution would help them see faster response times (53%) and have more efficient security investigations (51%), while improving visibility through improved data integration (49%) and accurate real-time data (45%).

According to the Forrester study: “IT leaders today face pressure from all sides. To cope with this pressure, many have invested in a number of point solutions. However, these solutions often operate in silos, straining organisational alignment and inhibiting the visibility and control needed to protect the environment. Using a unified endpoint security solution that centralises device data management enables companies to accelerate operations, enhance security, and drive collaboration between Security and IT ops teams.”

The four largest European colocation markets, Frankfurt, London, Amsterdam and Paris (FLAP), are set for a record-breaking finish to 2019, with a supercharged last quarter, according to figures from CBRE, the world's leading data centre real estate advisor.

CBRE analysis shows that there was 38MW of take-up and 63MW of new supply across the four markets during Q3. London and Frankfurt were particularly strong on the demand side, and Amsterdam was responsible for nearly 50% of the new supply. Building on this strong performance, CBRE forecasts that market activity in Q4 will be double that of Q3, creating a record year.

CBRE forecasts that 70MW of new take-up will be added to the FLAP market total in the final quarter, pushing the full year to beyond 200MW. This would be the first time on record that the four markets have breached 200MW of take-up in a single year.

According to CBRE, a further 150MW of new supply will be brought online in Q4. The new capacity represents nearly 50% of all the new capacity in the four markets during 2019 and will equate to a 23% growth in total market size during the year.
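CBRE's quarterly figures can be cross-checked with simple arithmetic. The Python sketch below uses only the numbers quoted above; the variable names are ours, and treating "nearly 50%" as exactly half is a simplifying assumption, so the implied full-year supply is a rough lower bound rather than a CBRE figure.

```python
q3_take_up_mw = 38
q3_supply_mw = 63
q4_take_up_forecast_mw = 70
q4_supply_forecast_mw = 150
full_year_take_up_floor_mw = 200  # "beyond 200MW"
q4_share_of_2019_supply = 0.5     # "nearly 50%", treated as exactly half (assumption)

# How close is the Q4 forecast to "double" Q3?
take_up_multiple = q4_take_up_forecast_mw / q3_take_up_mw
supply_multiple = q4_supply_forecast_mw / q3_supply_mw

# A full year above 200MW implies Q1 to Q3 take-up above this figure
implied_q1_to_q3_take_up_mw = full_year_take_up_floor_mw - q4_take_up_forecast_mw

# Q4 supply is slightly under half the 2019 total, so the total is slightly over this
implied_2019_supply_floor_mw = q4_supply_forecast_mw / q4_share_of_2019_supply

print(f"Q4 vs Q3 take-up: {take_up_multiple:.2f}x")  # Q4 vs Q3 take-up: 1.84x
print(f"Q4 vs Q3 supply: {supply_multiple:.2f}x")    # Q4 vs Q3 supply: 2.38x
print(f"Q1-Q3 take-up above {implied_q1_to_q3_take_up_mw}MW")
print(f"2019 new supply a little over {implied_2019_supply_floor_mw:.0f}MW")
```

On these figures, forecast Q4 take-up is a little under double Q3 while new supply is well over double, so "double" reads best as a round-number summary of overall activity.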

Mitul Patel, Head of EMEA Data Centre Research at CBRE commented:

“These record levels of development underway in the major European markets are creating challenges. The availability of freehold land in popular data centre hubs, which offer proximity to large amounts of HV power and fibre routes, such as Slough in the UK and Schiphol in Amsterdam, is highly constrained. The effects of these barriers to entry are that data centre developers are either choosing to locate in new, sometimes unproven, locations or are competing aggressively on price for land opportunities.

“Despite cloud providers driving market activity, enterprise demand for colocation remains consistent across the major markets. CBRE analysis shows that in the four years from 2016, there has been an average of 43MW of enterprise take-up per year across the four FLAP markets. As enterprise companies continue to utilize colocation footprints as part of their hybrid IT architecture, we expect this to remain consistent.”

Global study reveals rising customer expectations are outpacing adoption of AI tools that drive efficiency and customer experience.

LogMeIn has published the results of a global study conducted in partnership with Ovum to understand how support agents are faring in the age of ever-rising consumer expectations. The findings reveal that the vast majority of surveyed agents believe that the technology tools provided to customer-facing employees are not evolving as quickly as their needs are.

Today’s customers expect agents to have increasingly detailed knowledge of products, services, and company policies so they can achieve first contact resolution (FCR). However, the reality is that only 35% of agents say this is possible, as the majority (57%) do not have any AI tools, and more than half (53%) do not use a knowledge base.

The study surveyed 750 customer-facing employees, customer experience (CX) managers, and content managers in seven countries across North America, EMEA and APAC, and found that agents in physical locations are worse off than their counterparts in contact centres. Only 30% of field agents have AI tools compared to 44% in contact centres, and the unpleasant result for customers is that 20% of interactions require a call-back and 13% get transferred.

“We know that there is a direct correlation between agent frustration and customer discontent, and 85% of customer-facing employees expressed a very high degree of frustration because they can’t meet customer expectations,” said Ken Landoline, Principal Analyst, Ovum. “As the study highlights, all customer support employees need to be better equipped to meet rapidly growing customer expectations. Employees want to step up but are hampered by mediocre training and outdated, inefficient tools. Clearly this needs to change, or customer loyalty and revenue will ultimately suffer.”

AI’s Untapped Potential

AI-powered knowledge management tools offer a powerful solution to customer-facing employees working inside or outside of the contact centre who need instant access to company information, and 56% of surveyed knowledge base users are either extremely or very satisfied with them. Knowledge bases reduce the amount of time it takes to find information (66% think they are easy to search) and serve as a single source of truth for employees across teams and departments. LogMeIn, which commissioned the study with Ovum, is already working closely with companies to help reduce internal escalations by up to 30% with Bold360’s Advise solution, which leverages AI-powered knowledge management.

AI deployments for customer service and support also go beyond knowledge management. The most common uses are automating routine tasks (60%) and assisting agents in real time (50%), followed by customer journey mapping. Seventy-five percent of agents have a feedback system in place to advise management of issues they are facing during the course of their workday, and one-third utilise automatic pop-ups that recommend helpful next-best actions.

Yet AI adoption is still in its early stages. Most managers who participated in the survey are either still formulating their AI strategy (38%) or have only an early-phase strategy in place (28%). The dominant approach to implementation is to put ad hoc point solutions in place for a few selected use cases (44%).

“A lack of tools for customer service agents creates a vicious circle: staff can’t meet customer expectations which creates employee frustration, turnover, and of course, a poor customer experience,” said Ryan Lester, Senior Director of Customer Engagement Technologies at LogMeIn. “Even though powerful technologies like AI-based knowledge management tools can reverse the trend, adoption is slow and it’s hurting these organisations. Poor customer experiences have a negative impact on sales and repeat business, so this is a pressing issue that businesses need to address at the highest levels.”

A vast majority of enterprises worldwide have adopted multi-cloud strategies to keep pace with the need for digital transformation and IT efficiency, but they face significant challenges in managing the complexities and added requirements of these new application and data delivery infrastructures, according to a global survey conducted by the Business Performance Innovation (BPI) Network, in partnership with A10 Networks.

The new study, entitled ‘Mapping The Multi-Cloud Enterprise,’ finds that improved security, including centralised security and performance management, multi-cloud visibility of threats and attacks, and security automation, is the number one IT challenge facing companies in these new compute environments.

Among key survey findings:

“Multi-cloud is the de facto new standard for today’s software- and data-driven enterprise,” said Dave Murray, head of thought leadership and research for the BPI Network. “However, our study makes clear that IT and business leaders are struggling with how to reassert the same levels of management, security, visibility and control that existed in past IT models. Particularly in security, our respondents are currently assessing and mapping the platforms, solutions and policies they will need to realise the benefits and reduce the risks associated with their multi-cloud environments.”

“The BPI Network survey underscores a critical desire and requirement for companies to reevaluate their security platforms and architectures in light of multi-cloud proliferation,” said Gunter Reiss, vice president at A10 Networks. “The rise of 5G-enabled edge clouds is expected to be another driver for multi-cloud adoption. A10 believes enterprises must begin to deploy robust Polynimbus security and application delivery models that advance centralised visibility and management and deliver greater security automation across clouds, networks, applications and data.”

The study finds that some 38% of companies have reassessed, or will reassess, their current relationships with security and load balancer suppliers in light of multi-cloud, with most others still undecided about whether a change of vendor is needed.

Benefits and Drivers of Multi-Cloud

IT and business executive respondents point to a number of benefits, as well as business and technology forces, that are driving their move into multi-cloud environments.

The top-four drivers for multi-cloud:

The top-four benefits for multi-cloud:

Security Tops IT To-Do List

Respondents report facing a long list of challenges in managing multi-cloud compute environments, with security at the top of their agenda.

The top-four challenges for multi-cloud:

The top-four requirements for improving multi-cloud security and performance:

The top-four security-specific solution needs:

New survey highlights how aligning cloud transformation with core business planning leads to success.

Most organisations worldwide (93%) are migrating to the cloud for critical IT requirements, but nearly a third (30%) say they have failed to realise notable benefits from cloud computing, largely because they have not integrated their adoption plan as a core part of their broader business transformation strategy, according to the first Unisys Cloud Success Barometer™. Surveying 1,000 senior IT and business leaders on the impact and importance of cloud in 13 countries around the world, including the UK, Germany, Belgium, and the Netherlands, the research discovered a strong correlation between cloud success and strategic planning.

Cloud Commitment is Key

The study found that, when cloud transition is core to business strategy, the improvement in organisational effectiveness is far more dramatic: 83% of these respondents said it had improved since moving to the cloud. In contrast, among those who say the cloud is a minor part of their business strategy, just 30% say organisational effectiveness has improved since moving to the cloud.

Commitment through investment also produced positive results. Once organisations have experienced the benefits, they then continue to invest further in the cloud. Four-fifths (80%) of those who plan to spend substantially on their cloud computing in 2020 have seen their organisational effectiveness change significantly for the better.

Kevin Turner, Digital Workplace Strategy Lead, Unisys, said: “Our findings show that the majority of organisations are approaching their use of cloud computing from a tactical perspective – and whilst tactical moves can be very powerful – by taking a broader strategic view, and integrating with core business planning, cloud adoption will deliver greater results. Committing to the cloud with a considered approach, ensuring best practice supported by a robust methodology is imperative to leveraging the cloud to meet your objectives.”

The Future is Multi-Cloud

Meanwhile, only 28% of organisations have embraced multi-cloud solutions, which suggests there are more business benefits yet to be reaped, especially as multi-cloud users see the cloud as essential to staying competitive: 42% have been impacted by a competitor that leverages cloud innovations. This partial transition could limit the benefits organisations get from the cloud. By choosing multiple cloud providers, businesses can take advantage of the best parts of each provider’s services and customise them to suit the needs, and expectations, of the organisation.

“Multi-cloud represents the future of cloud computing, and for obvious reasons. Organisations that adopt multi-cloud strategies can design applications to run across any public cloud platform, expanding their marketplace power,” said Turner. “Additionally, a multi-cloud strategy helps organisations gain greater sovereignty over their data, spread their risk in case of downtime and increase the business's negotiating leverage – as well as offering cost savings by allowing businesses to shop rates for different service needs from multiple vendors.”

Cloud Pros and Cons

Nearly three in four (73%) senior business leaders say the benefits of cloud computing outweigh the barriers, and 66% have seen their organisational effectiveness change significantly for the better through the adoption of cloud computing. The top expected benefits of cloud were improved security (64%), reduced costs (50%), higher staff productivity (40%), improved agility to meet demand (40%) and better customer experience (40%).

Most business leaders (77%) said migration to the cloud had met or exceeded their expectations for security, while 75% said the same for improving the supply chain and 74% for driving innovation. The areas that fell short of respondents’ expectations were improved staff productivity (32%), increased revenue (32%), managing or reducing cost (35%) and reducing headcount (38%).

“Closing the gaps on these business results – including revenues, costs, productivity, innovation and organisational effectiveness – requires more than a ‘lift and shift’ transition of IT applications and infrastructure to the cloud. It requires changing the way companies work to better suit customers and staff, and changing their attitudes to digital innovation,” continued Turner. “Strategic planning along with security, scalability, realistic timelines and upskilling staff are all key to successful cloud implementation.”

Nutanix has announced the findings of its second global Enterprise Cloud Index survey and research report, which measures enterprise progress with adopting private, hybrid and public clouds. The new report found enterprises plan to aggressively shift investment to hybrid cloud architectures, with respondents reporting steady and substantial hybrid deployment plans over the next five years. The vast majority of 2019 survey respondents (85%) selected hybrid cloud as their ideal IT operating model.

For the second consecutive year, Vanson Bourne conducted research on behalf of Nutanix to learn about the state of global enterprise cloud deployments and adoption plans. The researcher surveyed 2,650 IT decision-makers in 24 countries around the world about where they’re running their business applications today, where they plan to run them in the future, what their cloud challenges are, and how their cloud initiatives stack up against other IT projects and priorities. The 2019 respondent base spanned multiple industries, business sizes, and the following geographies: the Americas; Europe, the Middle East, and Africa (EMEA); and Asia-Pacific and Japan (APJ).

This year’s report illustrated that creating and executing a cloud strategy has become a multidimensional challenge. At one time, a primary value proposition of the public cloud was substantial upfront capex savings. Now, enterprises have discovered that there are other considerations when selecting the best cloud for the business, and that no single cloud strategy fits every use case. For example, while applications with unpredictable usage may be best suited to public clouds offering elastic IT resources, workloads with more predictable characteristics can often run on-premises at a lower cost than in the public cloud. Savings also depend on businesses’ ability to match each application to the appropriate cloud service and pricing tier, and to review service plans and fees regularly, as these change frequently.
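
The trade-off between elastic and fixed capacity can be made concrete with a little arithmetic. The sketch below is illustrative only: the prices, the workload shapes and the function names `cloud_cost` and `on_prem_cost` are hypothetical and do not come from the Nutanix report. It assumes cloud capacity is billed per instance-hour actually used, while on-premises capacity must be sized for the peak hour and paid for all month.

```python
# Hypothetical unit prices for illustration only; real rates vary by provider and tier.
CLOUD_RATE = 0.10       # $ per instance-hour, on demand (assumed)
SERVER_MONTHLY = 50.0   # $ per on-premises server per month, amortised (assumed)
HOURS = 730             # approximate hours in a month


def cloud_cost(hourly_demand):
    """Elastic pricing: pay only for the instances actually used each hour."""
    return sum(hourly_demand) * CLOUD_RATE


def on_prem_cost(hourly_demand):
    """Fixed capacity: provision, and pay for, enough servers for the peak hour."""
    return max(hourly_demand) * SERVER_MONTHLY


# A predictable workload: 10 instances all month.
steady = [10] * HOURS
# An unpredictable workload: 2 instances most of the month, 40 during a 30-hour spike.
spiky = [2] * (HOURS - 30) + [40] * 30

for name, demand in [("steady", steady), ("spiky", spiky)]:
    cloud, onprem = cloud_cost(demand), on_prem_cost(demand)
    cheaper = "on-premises" if onprem < cloud else "public cloud"
    print(f"{name}: cloud ${cloud:,.0f} vs on-prem ${onprem:,.0f} -> {cheaper}")
```

With these made-up prices the steady workload is cheaper on-premises ($500 against $730), while the spiky one is far cheaper in the cloud ($260 against $2,000), because fixed capacity has to cover a peak that lasts only 30 hours.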

In this ever-changing environment, flexibility is essential, and a hybrid cloud provides this choice. Other key findings from the report include:

“As organisations continue to grapple with complex digital transformation initiatives, flexibility and security are critical components to enable seamless and reliable cloud adoption,” said Wendy M. Pfeiffer, CIO of Nutanix. “The enterprise has progressed in its understanding and adoption of hybrid cloud, but there is still work to do when it comes to reaping all of its benefits. In the next few years, we’ll see businesses rethinking how to best utilise hybrid cloud, including hiring for hybrid computing skills and reskilling IT teams to keep up with emerging technologies.”

Datrium has released findings from its industry report on the State of Enterprise Data Resiliency and Disaster Recovery 2019, which assesses how organizations are implementing disaster recovery (DR) to protect their data from attack or disaster.

Findings suggest that organizations are growing more concerned about the threat of disaster from ransomware, human error, power failure and natural disaster. The heightened threat of ransomware is particularly concerning for the enterprise data centre: nearly 90% of companies consider ransomware a critical threat to their business, and this is driving the need for DR. The research also found that the public cloud is increasingly being considered as a DR site. The cloud offers simpler, more cost-efficient DR, addressing several pain points that hold organizations back from responding to DR events, including the complexity of DR products and processes and their high associated costs.

The State of Enterprise Data Resiliency and Disaster Recovery 2019 study was developed to identify what DR solutions businesses currently utilize, their confidence in those solutions and how effective the solutions are at helping businesses return to normal operations following a disaster, in addition to the key capabilities IT teams consider when evaluating and selecting a DR solution to create their DR plan.

"This research confirms that ransomware is one of the biggest concerns for IT managers today," said Tim Page, CEO of Datrium. "This threat is significantly driving people to reevaluate their DR plans. It's no surprise that more than 88% of respondents said they’d use the public cloud for their DR site if they could pay for it only when they need to test or run their DR plans.”

Ransomware is Plaguing the Enterprise Data Centre

As the nucleus of an enterprise, the data centre must be protected from the threat of disaster or deliberate attacks. Half (50.4%) of all organizations surveyed have recently experienced a DR event, with ransomware reported as the leading cause.

Traditional Approaches to DR Put Organizations at Risk

Traditional DR approaches are lacking. More than half of the organizations that experienced a DR event in the past 24 months faced two significant challenges: failing over to their DR location and failing back.

The top three reasons failback was difficult were format conversion, the amount of time required to fail back, and the challenge of understanding what had changed in the system since failover.

Pay-for-Use DR in the Public Cloud is in High Demand

The industry norm today is to have physical sites for DR: according to more than half (52.7%) of respondents, the most common approach to DR is having more than one physical DR site. However, the industry is shifting toward DR in the public cloud. The vast majority (88.1%) of respondents said they would use the public cloud as their DR site if they only had to pay for it when they needed it.

"As companies weigh the best defence options against ransomware, they should consider the benefits of using the public cloud for their DR sites. They can counter the high costs of traditional DR solutions and free their IT staff to focus on revenue-generating initiatives. The public cloud offers lower management overhead and costs along with lower risk and higher reliability in testing and executing DR plans," added Page.

Nearly one in four respondents (23%) stated that their organization is not responding to DR events as effectively as it could be. The top three considerations holding organizations back from responding to DR events include: 1) the complexity of DR products and processes, 2) high associated costs and 3) lack of staff skilled in managing DR.

Growing Threat of Disaster Increases DR Budgets

Given the growing threat of disasters, DR budgets are significantly increasing.

“It's our mission to bring enterprise-grade DR to every IT team by cutting cost and complexity with an on-demand failproof model leveraging VMware Cloud on AWS,” said Page. “Today, Datrium also announced new Disaster Recovery as a Service capabilities that provide VMware users, both on premises and in the cloud, a reliable, cost-effective cloud-based disaster recovery solution with the industry’s first instant Recovery Time Objective (RTO) restarts.”

Research from Ensono and Wipro, conducted by Forrester, has found that 50 per cent of enterprise organisations are expanding or upgrading mainframe versus 69 per cent who are expanding or upgrading implementation of cloud. The research shows that 88 per cent of organisations are adopting a hybrid IT approach and 89 per cent state that adoption includes a hybrid cloud strategy.

Such is the focus on hybrid cloud uptake that one in four enterprise businesses cite internal pressures as a key motivator to exploring new cloud technologies.

Of the enterprise organisations still relying on mainframe, more than a quarter (28 per cent) are refactoring a portion of their apps to take advantage of new cloud technologies such as serverless (74 per cent) and containers (90 per cent).

However, the research also highlighted that companies are less likely to migrate finance and accounting workloads, preferring to migrate (refactor) only a portion of the workload. According to the respondents, the barriers to cloud migration include substantial costs, potential business disruption during migration, and security and compliance concerns. Refactoring enables organisations to move parts of workloads to whichever cloud is most appropriate.

The rapid expansion of the global healthcare industry and its adoption of advanced technologies are boosting the market for healthcare AR VR devices. The healthcare industry has shown increasing adoption of AR VR technologies and has incorporated them into routine operations.

Global Healthcare AR VR Market: Snapshot

Virtual reality enables a person to experience and interact with a simulated 3D environment. Augmented reality, by contrast, overlays digital information, such as sound and graphics, on the user’s view of the real world.

These technologies are in high demand for medical training and research processes in hospitals, clinics and diagnostic centers. The market is expected to grow exponentially given people’s growing preference for advanced technologies. The healthcare industry is also evolving into an advanced sector, providing better services to treat severe health issues and offering advanced health-related assistance. The adoption of advanced technologies is also a result of growing competition: top companies are vying with one another to introduce the latest technologies before their rivals do, in order to stay ahead in the race. According to the report’s forecasts, the global healthcare AR VR market is expected to hold a market value of over US$ 600 Mn in 2018, which may reach over US$ 15,000 Mn by the end of 2026. The market is anticipated to grow at an exceptionally high CAGR of 49.1% during the forecast period 2018-2026.
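
As a rough sanity check on the forecast, the compound annual growth rate (CAGR) implied by the two endpoint figures can be computed directly. This is a minimal sketch that treats the quoted lower bounds (“over US$ 600 Mn” and “over US$ 15,000 Mn”) as exact values, which is an assumption; the helper name `cagr` is ours, not the report’s.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.49 means 49% a year)."""
    return (end_value / start_value) ** (1 / years) - 1


start_2018 = 600.0    # US$ Mn, lower bound quoted for 2018 (treated as exact)
end_2026 = 15_000.0   # US$ Mn, lower bound quoted for 2026 (treated as exact)
years = 2026 - 2018   # eight compounding periods

rate = cagr(start_2018, end_2026, years)
print(f"Implied CAGR: {rate:.1%}")

# Conversely, compound US$ 600 Mn forward at the quoted 49.1% CAGR.
projected_2026 = start_2018 * (1 + 0.491) ** years
print(f"600 Mn at 49.1% for {years} years: {projected_2026:,.0f} Mn")
```

With these inputs the implied rate comes out at about 49.5%, and compounding US$ 600 Mn at 49.1% for eight years gives roughly US$ 14,650 Mn, so the quoted CAGR and endpoints agree to within the rounding of the “over” figures.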

Healthcare AR VR Market: Competitive Landscape

A number of companies stand out in the global market. Most of them lead the way in making huge investments and entering into mergers and acquisitions. The research report includes a brief profile of each of these leading companies and their strategic plans for the coming years. Some of the key players included in the report are Samsung Electronics Co. Ltd, Google Inc., DAQRI LLC, Oculus VR, LLC, Magic Leap, Inc., ImmersiveTouch, Inc., FIRSTHAND TECHNOLOGY INC. and HTC Corporation.

The research report forecasts the healthcare AR VR market based on the key factors affecting it and the trends prevailing in it. There has been growth in smartphone adoption among individuals due to the increasing use of digital technologies in healthcare AR VR, such as surgery planning and medical treatment. This has increased the revenue contribution to GDP, especially in developing regions such as Africa, APAC and MEA. North America is expected to hold the highest revenue share in the global healthcare AR VR market, at over US$ 5,000 Mn by the end of 2026. Furthermore, SEA and other APAC regions are also expected to be the most lucrative regions for companies looking for emerging opportunities in the market.

However, it is yet to be seen whether the developing regions will be capable enough to hold a decent share of the healthcare AR VR market in the coming years.

But IT should beware of overcoming AI-wash only to fall victim to ML-sprawl

Bryan Betts, Freeform Dynamics Ltd.

We hear and read a lot about AI and machine learning (ML) these days, but outside a few core applications, such as autonomous vehicles or decision-support systems, how much use is it really seeing? How well is it doing in industry, for example? After all, the huge volumes of data generated within the modern factory, or by connected products and devices, would seem like a natural fit for ML’s data-sifting capabilities.

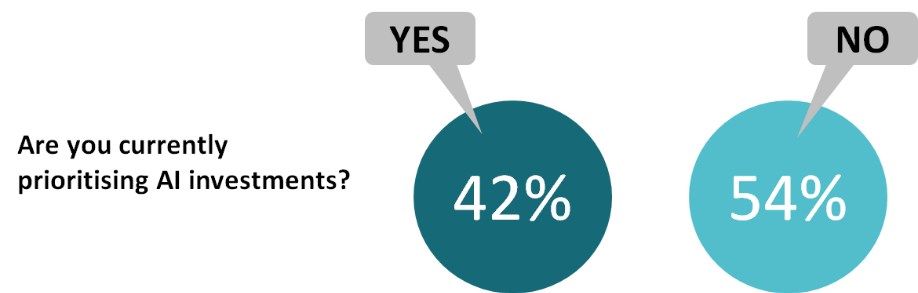

Yet in a recent study of AI plans and perceptions carried out by Freeform Dynamics, just over half of the IT professionals surveyed said their organisations were not currently prioritising AI investments (Figure 1). The reasons most commonly cited for this were excess hype and concerns over AI’s relevance and readiness.

The perceptual part of the problem is made worse by the tendency of some marketing people to indulge in ‘AI-washing’. They label almost anything automated as AI or AI-enabled, even when it simply has a few rules embedded in it – and of course when ML is just one component of what might one day turn into artificial intelligence.

Indeed, when most people refer to AI they are actually referring to ML, or perhaps to deep learning (DL), an ML subset that’s especially fashionable at present. Sometimes they excuse the conflation by labelling the thinking machines of the future as “general AI” and ML as “narrow AI”, although in some ways that’s just a differently-coloured coat of AI-wash.

This is gradually changing, with the term AI becoming more acceptable in certain usages. An example is virtual assistants or intelligent agents, especially where the AI is also able to act autonomously – remedying an insecure network port or S3 bucket, say. The key factor seems to be that these assistants combine multiple AI subsets, such as ML plus natural language processing and automated planning.

Making ML measurable

In any case, there is more to AI resistance than just an allergy to hype. Our study also revealed concerns around how to measure return on investment, how to scope, specify and cost the AI platform, and of course the availability of the necessary skills.

In addition, AI/ML is very much a multi-disciplinary matter, with dependencies that go way beyond IT. It’s essential therefore to also have business users, operational staff and other engineering disciplines involved right from the very start, from evaluation, budgeting and planning, through to implementation and ongoing operations.

Yet most of our survey respondents said it was a challenge to get all the relevant stakeholders and disciplines or departments working together. Just 11% of those with experience of AI initiatives said they had the full involvement of those required, while 37% said their stakeholders were “not at all” working together effectively.

The one area in our study where it flipped around to AI acceptance – to some degree, at least – was in manufacturing, where a slim majority of respondents (53%) said they were already prioritising AI investment. If you include those who agreed that they ought to be prioritising AI investment, as well as those already doing so, the totals rise from 60% for all sectors to 72% in manufacturing alone.

One of the biggest areas for AI/ML today is intelligent automation. This is often presented as a necessity: think of network security, or predictive maintenance in a factory. There is simply too much data now, and too many alerts coming in, for a human to process it all. In applications such as these, ML can not only act as a filter but also take action, assuming that action would also be automatic on the human’s part.
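
To illustrate the “filter, then act” pattern described above, here is a deliberately simple sketch. It swaps in a plain statistical technique (a z-score against a baseline of normal readings) as a stand-in for a trained ML model, so treat it as a picture of the workflow rather than of ML itself. The readings, the threshold of 3 standard deviations and the `respond` action are all hypothetical.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Summarise 'normal' behaviour as a mean and sample standard deviation."""
    return mean(history), stdev(history)


def triage(readings, baseline, threshold=3.0):
    """Return (index, value, z) for readings far enough from normal to matter.

    Everything else is filtered out, so a person (or an automated action)
    only sees the small number of unusual readings.
    """
    mu, sigma = baseline
    flagged = []
    for i, value in enumerate(readings):
        z = (value - mu) / sigma
        if abs(z) >= threshold:
            flagged.append((i, value, z))
    return flagged


def respond(alert):
    """Hypothetical automatic action; a real system might open a work order."""
    index, value, z = alert
    return f"reading {index} ({value}) is {z:+.1f} sd from normal: schedule inspection"


history = [1.0, 1.1, 0.9, 1.05, 0.95, 1.0, 1.02, 0.98]  # hypothetical vibration levels
incoming = [1.01, 0.97, 1.60, 1.03, 0.40]

alerts = triage(incoming, build_baseline(history))
for alert in alerts:
    print(respond(alert))
```

Of the five incoming readings, only the two far from the baseline (positions 2 and 4) reach the action step. In a production system the baseline and threshold would be learned and tuned rather than hard-coded.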

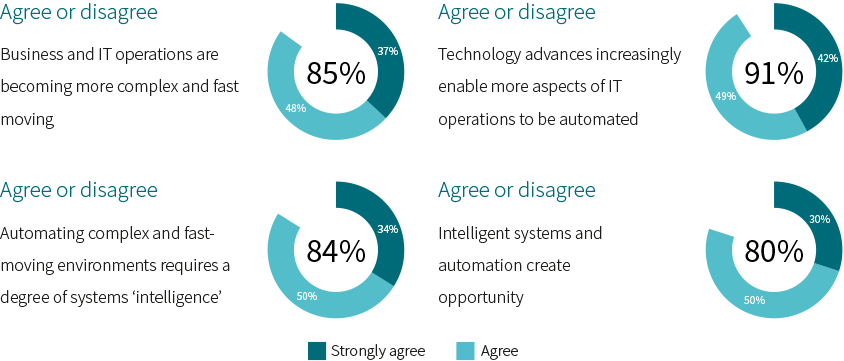

One thing our research into AI has shown though is that this only tells part of the story. For a start, the technology we want (or need) to automate must actually be capable of automation. It is something of a self-reinforcing loop – the application of technology to a process also enables it to be automated, and as the process’s speed and complexity ramp up, that automation becomes pretty much essential (Figure 2).

AI as an agent of change

And then there is automation and ML not just as ways to keep up with an ever-faster world, but as creators of opportunity. By automating the routine, we should – in theory, at least – free up human and mental resources for new tasks. Certainly, past industrial revolutions have subsequently driven innovations and changes in culture and society that went far beyond their initial industrial impact.

It remains to be seen if intelligent automation will indeed amount to a new industrial revolution, as some believe it will. Even if it does, will it be as disruptive and all-embracing as the changes brought by steam power and mass production? For instance, the shopper of today is used to clothing and fashion being largely disposable. Yet in pre-industrial times, the effort required to grow fibres on a sheep or plant, then manually spin, weave, sew and dye them, meant that your clothing could be the most valuable thing you owned.

It is quite possible that we are in the position of our medieval ancestors, trying to imagine a world in which a garment, far from being something that you leave in your will, costs the same as a pint of beer – and can be discarded as readily. That means we need to plan for a future that we cannot see – not just for the things that we know that we need more information on (the known-unknowns), but for the “unknown unknowns”.

For example, we know that while previous industrial revolutions destroyed some jobs, they created others. But can we assume that will always be true, and even if it is, will the new jobs be of equal value, status and skill – will master weavers once again be replaced by mere machine-minders?

Taking advantage of AI today

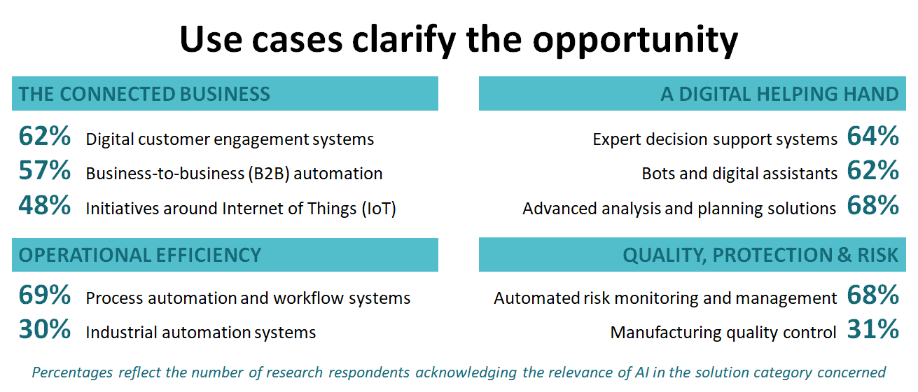

Whatever changes may follow in the future, our survey respondents clearly see opportunities in using AI today, to help both staff and customers, and to improve operational efficiency (Figure 3).

Yes, there is the problem of simply knowing how and where to start, and then what to expect. And yes, there is the challenge of avoiding AI bias, which can creep in in very simple ways – for example by using training data that reflects the current state of affairs when that itself is biased.

AI tools and technologies are increasingly available in packaged forms, however, and it’s useful to remember that they are just tools and technologies. For any technology to win adoption it has to be trustworthy: people don’t need to understand how it works, but it needs to be explainable, fair and accessible. AI is no different.

The one caveat is to start thinking now about strategy and architecture, if you’re not already doing so. Something we’ve seen in our research time and again is that although the barrier to acceptance is high, once a new technology has proved itself in one application it tends to find others pretty fast. So unless you get reusable processes and platforms in place first, you could end up in a disjointed landscape of incompatible silos and stacks. We’ve already had VM-sprawl and cloud-sprawl – let’s not have ML-sprawl next!

IT spending in EMEA will return to growth at $798 billion in 2020, an increase of 3.4% from 2019, according to the latest forecast by Gartner, Inc.

“2020 will be a recovery year for IT spending in EMEA after three consecutive years of decline,” said John Lovelock, research vice president at Gartner. “This year declines in the Euro and the British Pound against the U.S. Dollar, at least partially due to Brexit concerns, pushed some IT spending down and caused a rise in local prices for technology hardware. However, 2020 will be a rebound year as Brexit is expected to be resolved and the pressure on currency rates relieved.”

Spending on Devices Still Down While Enterprise Software Spending Keeps Rising

In 2019, EMEA spending on devices (including PCs, tablets and mobile phones) is set to decline 10.7% (see Table 1). Higher prices, partly due to currency declines, and a lack of new “must have” features in mobile phones have allowed consumers to defer upgrades for another year. Devices spending will not rebound in 2020, but will instead fall by 1.3%, as both businesses and consumers move away from spending on PCs and tablets.

After 2019, the communications services segment will achieve long-term growth despite fixed-line services in both the consumer and business spaces declining every year through 2023. While mobile voice spending is flat — mainly due to price declines — mobile data spending increases 3% to 4% per year, which is keeping the overall communications services market growing in 2020.

Enterprise software will remain the fastest-growing market segment in 2020. EMEA spending for enterprise software will increase 3.4% and 9.2% in 2019 and 2020, respectively. Software as a service (SaaS) will achieve 14.1% growth in 2019 and 17.7% in 2020.

Table 1. EMEA IT Spending Forecast (Millions of U.S. Dollars)

| Segment | 2019 Spending | 2019 Growth (%) | 2020 Spending | 2020 Growth (%) |
|---|---|---|---|---|
| Data Center Systems | 42,052 | -3.8 | 42,949 | 2.1 |
| Enterprise Software | 109,351 | 3.4 | 119,377 | 9.2 |
| Devices | 116,346 | -10.7 | 114,833 | -1.3 |
| IT Services | 282,885 | 0.4 | 297,985 | 5.3 |
| Communications Services | 221,523 | -6.3 | 223,102 | 0.7 |
| Overall IT | 772,156 | -3.2 | 798,246 | 3.4 |

Source: Gartner (November 2019)
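
Because the EMEA table gives both segment values and the overall total, the totals and growth rates can be recomputed as a consistency check. A short sketch, with the figures copied from the table (millions of US dollars):

```python
# Segment rows from the EMEA IT spending table: (2019 spending, 2020 spending), US$ millions.
segments = {
    "Data Center Systems": (42_052, 42_949),
    "Enterprise Software": (109_351, 119_377),
    "Devices": (116_346, 114_833),
    "IT Services": (282_885, 297_985),
    "Communications Services": (221_523, 223_102),
}

# Recompute the "Overall IT" row from the segments.
total_2019 = sum(v2019 for v2019, _ in segments.values())
total_2020 = sum(v2020 for _, v2020 in segments.values())
growth_2020 = (total_2020 / total_2019 - 1) * 100

print(f"2019 total: {total_2019:,}")
print(f"2020 total: {total_2020:,}")
print(f"2020 growth: {growth_2020:.1f}%")

# Per-segment 2020 growth, to compare with the "2020 Growth (%)" column.
for name, (v2019, v2020) in segments.items():
    print(f"{name:<25} {(v2020 / v2019 - 1) * 100:+.1f}%")
```

The recomputed 2019 total is 772,157, one more than the published 772,156, which is consistent with rounding in the segment figures. The 2020 total (798,246), the overall growth (3.4%) and every segment’s 2020 growth rate match the table.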

Regulatory Compliance Fuels Spending

The complex geopolitical environment across EMEA has pushed regulatory compliance to the top of the priority list for many organizations. EMEA spending on security will grow 9.3% in 2019 and rise by 8.9% in 2020.

“Globally, security spending is increasing and being driven by the need to be compliant with tariffs, trade policies and intellectual property rights. In EMEA, privacy and compliance concerns, further driven by GDPR, take precedence,” said Mr. Lovelock.

The U.S. is leading cloud adoption and accounts for over half of global spending on cloud; U.S. spending on public cloud services will total $140.4 billion in 2020, up 15.5% from 2019. In terms of cloud spending, the U.K. holds the No. 2 position behind the U.S. The U.K. devotes 8% of its IT spending to public cloud services, which will total US$16.6 billion in 2020, up 13.2% from 2019. In EMEA, overall spending on public cloud services will reach $57.7 billion in 2020, up from $50 billion in 2019.
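
The growth figures in the paragraph above can be turned around to recover the implied 2019 baselines, and the U.S. figure can be set against the $266.4 billion worldwide public cloud forecast that Gartner gives later in this section. This sketch assumes the $140.4 billion refers to U.S. spending, a reading consistent with “over half” of the worldwide total; the variable names are ours.

```python
# Figures from the text, US$ billions. Assumption: 140.4 is the U.S. figure.
us_2020, us_growth = 140.4, 0.155
uk_2020, uk_growth = 16.6, 0.132
emea_2019, emea_2020 = 50.0, 57.7
global_2020 = 266.4  # Gartner worldwide public cloud forecast for 2020

# A value that grew by g to reach v had a base of v / (1 + g) a year earlier.
us_2019 = us_2020 / (1 + us_growth)
uk_2019 = uk_2020 / (1 + uk_growth)

emea_growth = emea_2020 / emea_2019 - 1
us_share = us_2020 / global_2020

print(f"Implied U.S. 2019 spend: ${us_2019:.1f}bn")
print(f"Implied U.K. 2019 spend: ${uk_2019:.1f}bn")
print(f"EMEA growth 2019-2020: {emea_growth:.1%}")
print(f"U.S. share of worldwide 2020 spend: {us_share:.1%}")
```

On this reading the U.S. accounts for about 53% of worldwide public cloud spending in 2020, and EMEA spending grows by about 15.4%.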

Gartner predicts that organizations with a high percentage of IT spending dedicated to the cloud will become the recognized digital leaders in the future.

“Organizations in Europe, regardless of industry, are shifting their balance from traditional to digital — moving toward “techquilibrium,” a technological balancing point that defines how digital an enterprise needs to be to compete or lead,” said Mr. Lovelock. “Not every company needs to be digital in the same way or to the same extent. This move towards rebalancing the traditional and digital is clearly visible amongst EMEA companies."

Even as digitalization is becoming pervasive among EMEA organizations, these organizations remain vulnerable to “turns,” forces that create uncertainty and pressure for their CIOs. Ninety percent of EMEA organizations have faced a turn in the last four years, according to Gartner Inc.’s annual global survey of CIOs. With 2020 likely to be more volatile than 2019, CIOs must help their organizations acquire the capabilities needed to win when the next turn arrives.

“Simply being digital isn’t really going to cut it anymore. Forty-one percent of EMEA CIOs are already running mature digital businesses, up from 35% last year. It’s the coming turns that are the problem, not digitalization,” said Andy Rowsell-Jones, vice president and distinguished analyst at Gartner. “No one is immune from economic, geopolitical, technical or societal turns, which are likely to be more common in 2020 and beyond. These turns can take different forms and can disrupt an organization’s abilities in many ways.”

The 2020 Gartner CIO Agenda Survey gathered data from more than 1,000 CIO respondents, including 383 in 40 EMEA countries, and all major industries. The EMEA sample represents nearly $1.7 trillion in revenue and $23 billion in IT spending.

Turns Weaken an Organization’s Ability to Build Fitness

The nature and severity of the turns reported by CIOs varied widely. In EMEA, they included adverse regulatory intervention (41% of CIO respondents), organizational disruption (40%) and severe operating cost pressure (40%). “Those and other severe scenarios such as IT service failure or natural disaster have affected how organizations do business, and in most cases are making new things harder,” said Mr. Rowsell-Jones. “The real trick is how well organizations weathered these challenges.”

Mr. Rowsell-Jones added that the problem with a turn is that, once you’re in one, it disrupts your ability to respond. Thirty-six percent of EMEA CIOs reported that turns had handicapped them in bringing new business initiatives to market, pushing out time to value and ultimately reducing their success.

Turns also weaken an organization’s ability to build its “fitness” muscles. Thirty-four percent of EMEA CIOs said they were behind in the race to attract the right talent, 30% were suffering slowed or negative IT budget growth, and 32% said funding for new business initiatives had dried up. “No matter what it is, prepare for a turn before you attempt to go around it,” said Mr. Rowsell-Jones.

Fit Organizations Emerge Stronger From Turns Than Fragile Organizations

In the global survey, Gartner separated CIO respondents’ organizations that had suffered a severe turn into two groups — “fit” and “fragile” — based on business outcomes. Gartner looked at over 50 organizational performance attributes to determine what differentiated the two groups.

Results were bundled into three themes — alignment, anticipation and adaptability — which indicated simple shifts in leadership priorities. For example, in times of crisis, leaders of fit organizations ensure that the organization stays together while it shifts to a new direction. They actively search for emerging trends or situations that require change. They take calculated risks but trust the core business capabilities. Leaders in fragile businesses are measurably worse at these things.

The differences between fit and fragile organizations do not end with leadership attitudes. Internal institutions and process matter too. For example, disciplined IT investment decisions and having a clear strategy were cited as key areas of alignment by fit organizations, but much less so by fragile organizations. The survey found that 53% of global fit organizations are likely to have a flexible IT funding model to respond to changes, compared to 43% in EMEA. In addition, having a clear and consistent overall business strategy ranks as one of the most distinctive traits of fit organizations. Sixty-seven percent of the global fit CIOs said their organization excels at this; so, too, did 63% of EMEA CIOs.

A robust relationship with the CEO is also a strong differentiator. A strong CEO relationship helps CIOs learn about a change in business strategy as it occurs. In EMEA, 43% of CIOs are reporting directly to their CEOs. This demonstrates that EMEA CIOs are aligning their purpose and direction with business executives.

EMEA CIOs Are Prioritizing Cybersecurity, RPA and AI for 2020

Fit IT leaders use IT to gain competitive advantage and help organizations anticipate changes ahead of time. Similar to the global fit CIOs, a large percentage of EMEA CIOs have already deployed or will deploy cybersecurity, robotic process automation (RPA) and artificial intelligence (AI) in the next 12 months.

Adaptability is the IT organization’s chief responsibility. “In a fit organization, IT leaders turn the IT organization into an instrument of change,” said Mr. Rowsell-Jones. “Sixty percent of global fit organizations rate the clarity and effectiveness of IT governance very highly. Similarly, 51% of EMEA CIOs rank their organization’s IT governance as effective or highly effective. IT governance can coordinate resource allocation in times of disruption, which offers survival benefits to an IT organization.”

“Digital is no longer the road to competitive advantage,” said Mr. Rowsell-Jones. “How organizations flex as the environment changes and how CIOs organize to deal with turns will dictate their success in the future, especially in 2020.”

Worldwide Public Cloud revenue to grow 17% in 2020

The worldwide public cloud services market is forecast to grow 17% in 2020 to total $266.4 billion, up from $227.8 billion in 2019, according to Gartner, Inc.

“At this point, cloud adoption is mainstream,” said Sid Nag, research vice president at Gartner. “The expectations of the outcomes associated with cloud investments therefore are also higher. Adoption of next-generation solutions are almost always ‘cloud-enhanced’ solutions, meaning they build on the strengths of a cloud platform to deliver digital business capabilities.”

Software as a service (SaaS) will remain the largest market segment, which is forecast to grow to $116 billion next year due to the scalability of subscription-based software (see Table 1). The second-largest market segment is cloud system infrastructure services, or infrastructure as a service (IaaS), which will reach $50 billion in 2020. IaaS is forecast to grow 24% year over year, which is the highest growth rate across all market segments. This growth is attributed to the demands of modern applications and workloads, which require infrastructure that traditional data centers cannot meet.

Table 1. Worldwide Public Cloud Service Revenue Forecast (Billions of U.S. Dollars)

| Segment | 2018 | 2019 | 2020 | 2021 | 2022 |
|---|---|---|---|---|---|
| Cloud Business Process Services (BPaaS) | 41.7 | 43.7 | 46.9 | 50.2 | 53.8 |
| Cloud Application Infrastructure Services (PaaS) | 26.4 | 32.2 | 39.7 | 48.3 | 58.0 |
| Cloud Application Services (SaaS) | 85.7 | 99.5 | 116.0 | 133.0 | 151.1 |
| Cloud Management and Security Services | 10.5 | 12.0 | 13.8 | 15.7 | 17.6 |
| Cloud System Infrastructure Services (IaaS) | 32.4 | 40.3 | 50.0 | 61.3 | 74.1 |
| Total Market | 196.7 | 227.8 | 266.4 | 308.5 | 354.6 |

BPaaS = business process as a service; IaaS = infrastructure as a service; PaaS = platform as a service; SaaS = software as a service

Note: Totals may not add up due to rounding.

Source: Gartner (November 2019)
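
The worldwide forecast table also lends itself to a quick check of the claims in the text: that IaaS has the highest growth rate and that the market grows about 17% in 2020. A minimal sketch with the figures copied from the table (US$ billions):

```python
years = [2018, 2019, 2020, 2021, 2022]
forecast = {  # US$ billions, copied from the worldwide public cloud table
    "BPaaS": [41.7, 43.7, 46.9, 50.2, 53.8],
    "PaaS": [26.4, 32.2, 39.7, 48.3, 58.0],
    "SaaS": [85.7, 99.5, 116.0, 133.0, 151.1],
    "Cloud Management and Security": [10.5, 12.0, 13.8, 15.7, 17.6],
    "IaaS": [32.4, 40.3, 50.0, 61.3, 74.1],
}
published_total = [196.7, 227.8, 266.4, 308.5, 354.6]

i19, i20 = years.index(2019), years.index(2020)

# Year-over-year growth for 2020, per segment, highest first.
growth_2020 = {name: v[i20] / v[i19] - 1 for name, v in forecast.items()}
for name, g in sorted(growth_2020.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<30} {g:+.1%}")

# Column sums, to compare with the published "Total Market" row.
summed_total = [round(sum(col), 1) for col in zip(*forecast.values())]
print("summed:   ", summed_total)
print("published:", published_total)
print(f"market growth 2020: {published_total[i20] / published_total[i19] - 1:.1%}")
```

The segment columns reproduce the published totals except for 2019 (227.7 against 227.8), which falls within the rounding mentioned in the table note. IaaS shows the highest 2020 growth at about 24%, and the published totals give market growth of about 16.9%, reported as 17%.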

Various forms of cloud computing are among the top three areas where most global CIOs will increase their investment next year, according to Gartner. As organizations increase their reliance on cloud technologies, IT teams are rushing to embrace cloud-built applications and relocate existing digital assets. “Building, implementing and maturing cloud strategies will continue to be a top priority for years to come,” said Mr. Nag.

“The cloud managed service landscape is becoming increasingly sophisticated and competitive. In fact, by 2022, up to 60% of organizations will use an external service provider’s cloud managed service offering, which is double the percentage of organizations from 2018,” said Mr. Nag. “Cloud-native capabilities, application services, multicloud and hybrid cloud comprise a diverse cloud ecosystem that will be important differentiators for technology product managers. Demand for strategic cloud service outcomes signals an organizational shift toward digital business outcomes.”

More than 740,000 autonomous-ready vehicles to be added to global market

By 2023, worldwide net additions of vehicles equipped with hardware that could enable autonomous driving without human supervision will reach 745,705 units, up from 137,129 units in 2018, according to Gartner, Inc. In 2019, net additions will be 332,932 units. This growth will predominantly come from North America, Greater China and Western Europe, as countries in these regions become the first to introduce regulations around autonomous driving technology.

Net additions represent the annual increase in the number of vehicles equipped with hardware for autonomous driving. They do not represent sales of physical units, but rather demonstrate the net change in vehicles that are autonomous-ready.
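One way to read this definition is that a year's net addition is the change in the autonomous-ready fleet between consecutive year-ends, so any vehicles leaving the fleet during the year are already subtracted. The sketch below illustrates that reading with invented fleet counts; neither the numbers nor the function name `net_additions` come from Gartner.

```python
# Hypothetical year-end counts of autonomous-ready vehicles in service.
# These figures are invented for illustration; they are NOT Gartner data.
installed_base = {2017: 1_000, 2018: 1_400, 2019: 2_100}


def net_additions(base: dict[int, int]) -> dict[int, int]:
    """Net change in the installed base from one year-end to the next.

    Vehicles that leave the fleet during a year are netted out, which is
    why this figure differs from unit sales.
    """
    years = sorted(base)
    return {year: base[year] - base[prev] for prev, year in zip(years, years[1:])}


print(net_additions(installed_base))
```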

“There are no advanced autonomous vehicles outside of the research and development stage operating on the world’s roads now,” said Jonathan Davenport, principal research analyst at Gartner. “There are currently vehicles with limited autonomous capabilities, yet they still rely on the supervision of a human driver. However, many of these vehicles have hardware, including cameras, radar, and in some cases, lidar sensors, that could support full autonomy. With an over-the-air software update, these vehicles could begin to operate at higher levels of autonomy, which is why we classify them as ‘autonomous-ready.’”

Although net additions of autonomous-ready vehicles are forecast to grow quickly, commercial net additions remain low in absolute terms compared with those in the consumer segment. Net additions of consumer vehicles equipped with hardware that could enable autonomous driving without human supervision are expected to reach 380,072 in 2020, while the commercial segment will see just 10,590 (see Table 1).

Table 1: Autonomous-Ready Vehicles Net Additions, 2018-2023

| Use Case | 2018 | 2019 | 2020 | 2021 | 2022 | 2023 |
|---|---:|---:|---:|---:|---:|---:|
| Commercial | 2,407 | 7,250 | 10,590 | 16,958 | 26,099 | 37,361 |
| Consumer | 134,722 | 325,682 | 380,072 | 491,664 | 612,486 | 708,344 |
| Total | 137,129 | 332,932 | 390,662 | 508,622 | 638,585 | 745,705 |

Source: Gartner (November 2019)
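The rows of Table 1 can be cross-checked, and a few summary figures derived, with a short Python script. The figures are those in the table; the variable names are ours. Treating the sum of annual net additions as the overall growth of the autonomous-ready fleet follows from the definition of net additions given above, and covers only the six years shown.

```python
# Net additions of autonomous-ready vehicles from Table 1 (Gartner, November 2019).
YEARS = [2018, 2019, 2020, 2021, 2022, 2023]
COMMERCIAL = [2_407, 7_250, 10_590, 16_958, 26_099, 37_361]
CONSUMER = [134_722, 325_682, 380_072, 491_664, 612_486, 708_344]
TOTAL = [137_129, 332_932, 390_662, 508_622, 638_585, 745_705]

# The Commercial and Consumer rows should sum exactly to the Total row.
row_sums = [c + k for c, k in zip(COMMERCIAL, CONSUMER)]
assert row_sums == TOTAL, "rows do not add up"

# Compound annual growth rate of total net additions, 2018 to 2023 (5 steps).
periods = YEARS[-1] - YEARS[0]
total_cagr = ((TOTAL[-1] / TOTAL[0]) ** (1 / periods) - 1) * 100

# Commercial share of total net additions in each year, in percent.
commercial_share = {y: 100 * c / t for y, c, t in zip(YEARS, COMMERCIAL, TOTAL)}

# Summing annual net additions gives the fleet growth over 2018-2023.
cumulative = sum(TOTAL)

print(f"Total net additions CAGR 2018-2023: {total_cagr:.1f}%")
print(f"Commercial share 2020: {commercial_share[2020]:.1f}%")
print(f"Cumulative net additions 2018-2023: {cumulative:,}")
```

The check confirms that the table is internally consistent, and shows commercial vehicles accounting for under 3% of net additions in 2020.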

Lack of Regulation Inhibiting Autonomous Vehicle Deployment

Today, there are no countries with active regulations that allow production-ready autonomous vehicles to operate legally, which is a major roadblock to their development and use.

“Companies won’t deploy autonomous vehicles until it is clear they can operate legally without human supervision, as the automakers are liable for the vehicle’s actions during autonomous operation,” said Mr. Davenport. “As we see more standardized regulations around the use of autonomous vehicles, production and deployment will rapidly increase, although it may be a number of years before that occurs.”

Sensor Hardware Costs a Limiting Factor

By 2026, the sensors needed to deliver autonomous driving functionality will cost approximately 25% less than they will in 2020. Even with such a decline, these sensor arrays will remain prohibitively expensive. This means that through the next decade, advanced autonomous functionality will be available only on premium vehicles and vehicles sold to mobility service fleets.
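A 25% fall spread over six years is modest on an annual basis. Assuming a constant compound rate (our simplification; Gartner gives only the two end-points), the implied average decline works out at under 5% a year. The function name `implied_annual_change` is hypothetical.

```python
def implied_annual_change(total_change: float, years: int) -> float:
    """Constant yearly rate (as a fraction) that compounds to `total_change`.

    `total_change` is also a fraction, e.g. -0.25 for a 25% fall.
    """
    return (1 + total_change) ** (1 / years) - 1


# Gartner: sensor costs roughly 25% lower in 2026 than in 2020 (six years apart).
rate = implied_annual_change(-0.25, 2026 - 2020)
print(f"Implied average decline: {-rate * 100:.1f}% per year")
```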

“Research and development robo-taxis with advanced self-driving capabilities cost as much as $300,000 to $400,000 each,” said Mr. Davenport. “Sophisticated lidar devices, which are a type of sensor needed for these advanced autonomous vehicles, can cost upward of $75,000 per unit, which is more than double the price of your average consumer automobile. This puts higher-level autonomous vehicle technology out of reach for the mainstream market, at least for now.”

Public Perceptions of Safety Will Determine Growth

Vehicle-human handover safety concerns are a substantial impediment to the widespread adoption of autonomous vehicles. Currently, autonomous vehicle perception algorithms are still slightly less capable than human drivers.

“A massive amount of investment has been made into the development of autonomous vehicle perception systems, with more than 50 companies racing to develop a system that is considered safe enough for commercial use,” said Mr. Davenport. Gartner predicts that it will take until 2025 before these systems demonstrate capabilities that are an order of magnitude better than human drivers.

To accelerate this innovation, technology companies are using simulation software powered by artificial intelligence to understand how vehicles would handle different situations. This enables companies to generate thousands of miles of vehicle test data in hours, which would take weeks to obtain through physical test driving.

“One of the biggest challenges ahead for the industry will be to determine when autonomous vehicles are safe enough for road use,” said Michael Ramsey, senior director analyst at Gartner. “It’s difficult to create safety tests that capture the responses of vehicles in an exhaustive range of circumstances. It won’t be enough for an autonomous vehicle to be just slightly better at driving than a human. From a psychological perspective, these vehicles will need to have substantially fewer accidents in order to be trusted.”

By 2023, digital transformation spending will grow to more than 50% of all ICT investment, up from 36% today, with the largest growth in data intelligence and analytics.

International Data Corporation (IDC) has unveiled IDC FutureScape: Worldwide Digital Transformation 2020 Predictions. In this year's digital transformation (DX) predictions, IDC highlights the critical business drivers accelerating DX initiatives and investments as companies seek to navigate business challenges effectively, compete at hyperscale, and meet rising customer expectations.

In an IDC FutureScape Web conference held today at 12:00 pm U.S. Eastern time, IDC analysts Bob Parker and Shawn Fitzgerald discussed the ten industry predictions that will impact digital transformation efforts of CIOs and IT professionals over the next one to five years and offered guidance for managing the implications these predictions harbor for their IT investment priorities and implementation strategies. To register for an on-demand replay of this Web conference or any of the IDC FutureScape Web conferences, please visit https://www.idc.com/idcfuturescape2020.

The predictions from the IDC FutureScape for Worldwide Digital Transformation are:

Prediction 1 – Future of Culture: By 2024, leaders in 50% of G2000 organizations will have mastered "future of culture" traits such as empathy, empowerment, innovation, and customer- and data-centricity to achieve leadership at scale.

Prediction 2 – Digital Co-Innovation: By 2022, empathy among brands and for customers will drive ecosystem collaboration and co-innovation among partners and competitors, delivering 20% collective growth in customer lifetime value.

Prediction 3 – AI at Scale: By 2024, with proactive, hyperspeed operational changes and market reactions, artificial intelligence (AI)-powered enterprises will respond to customers, competitors, regulators, and partners 50% faster than their peers.

Prediction 4 – Digital Offerings: By 2023, 50% of organizations will neglect investing in market-driven operations and will lose market share to existing competitors that made the investments, as well as to new digital market entrants.

Prediction 5 – Digitally Enhanced Workers: By 2021, new future of work (FoW) practices will expand the functionality and effectiveness of the digital workforce by 35%, fueling an acceleration of productivity and innovation at practicing organizations.

Prediction 6 – Digital Investments: By 2023, DX spending will grow to over 50% of all ICT investment from 36% today, with the largest growth in data intelligence and analytics as companies create information-based competitive advantages.

Prediction 7 – Ecosystem Force Multipliers: By 2025, 80% of digital leaders will devise and differentiate end-customer value measures from their platform ecosystem participation, including an estimate of the ecosystem multiplier effects.

Prediction 8 – Digital KPIs Mature: By 2020, 60% of companies will have aligned digital KPIs to direct business value measures of revenue and profitability, eliminating today's measurement crisis where DX KPIs are not directly aligned.

Prediction 9 – Platforms Modernize: Driven both by escalating cyberthreats and by the need for new functionality, 65% of organizations will aggressively modernize legacy systems with extensive new technology platform investments through 2023.

Prediction 10 – Invest for Insight: By 2023, enterprises seeking to monetize benefits of new intelligence technologies will invest over $265 billion worldwide, making DX business decision analytics and AI a nexus for digital innovation.

According to Shawn Fitzgerald, research director, Worldwide Digital Transformation Strategies, "Now in its fourth annual installment, our digital transformation predictions mark the next set of inflection points and related consequences executives should evaluate for inclusion into their multi-year planning scenarios. Direct digital transformation (DX) investment is growing at 17.5% CAGR and expected to approach $7.4 trillion over the years 2020 to 2023 as companies build on existing strategies and investments, becoming digital-at-scale future enterprises. Organizations with new digital business models at their core are well positioned to successfully compete in the digital platform economy."
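IDC quotes a growth rate and a four-year cumulative total, but no annual figures. If one assumes, purely for illustration, that spending grows by exactly 17.5% every year from 2020 to 2023, the total can be split into yearly amounts using a geometric series. The resulting yearly values are our arithmetic under that assumption, not IDC estimates, and `annual_path` is a hypothetical helper.

```python
def annual_path(cumulative: float, growth: float, years: int) -> list[float]:
    """Split a multi-year cumulative total into yearly amounts that grow at a
    constant rate `growth` (a fraction), returned in chronological order.
    """
    # cumulative = first * (1 + (1+g) + (1+g)^2 + ...), a geometric series.
    factor = sum((1 + growth) ** i for i in range(years))
    first = cumulative / factor
    return [first * (1 + growth) ** i for i in range(years)]


# IDC: about $7.4 trillion of direct DX investment over 2020-2023 at a 17.5% CAGR.
spend = annual_path(7.4, 0.175, 4)
for year, amount in zip(range(2020, 2024), spend):
    print(f"{year}: ${amount:.2f} trillion")
```

Under this assumption, annual direct DX investment would rise from roughly $1.4 trillion in 2020 to roughly $2.3 trillion in 2023.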

Worldwide spending on AR/VR to reach $18.8 billion in 2020

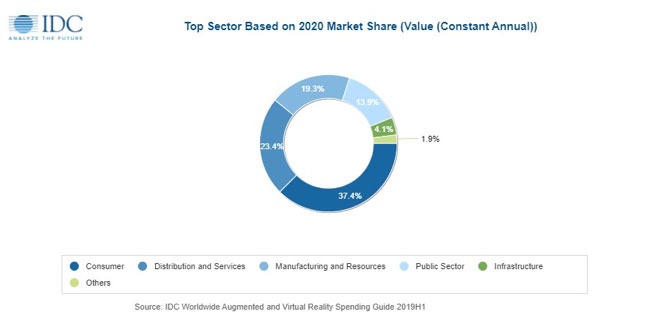

Worldwide spending on augmented reality and virtual reality (AR/VR) is forecast to be $18.8 billion in 2020, an increase of 78.5% over the $10.5 billion International Data Corporation (IDC) expects will be spent in 2019. The latest update to IDC's Worldwide Augmented and Virtual Reality Spending Guide also shows that worldwide spending on AR/VR products and services will continue this strong growth throughout the 2019-2023 forecast period, achieving a five-year compound annual growth rate (CAGR) of 77.0%.
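Working from the rounded headline figures gives a slightly different growth rate from the 78.5% IDC states; the gap is presumably due to rounding of the underlying numbers. The sketch below computes the growth from the rounded figures and backs out the 2019 value that 78.5% would imply. The helper `growth_pct` is our own.

```python
def growth_pct(old: float, new: float) -> float:
    """Percentage change from `old` to `new`."""
    return (new / old - 1) * 100


# IDC's rounded figures, in billions of US dollars.
spend_2019, spend_2020 = 10.5, 18.8
print(f"Growth from rounded figures: {growth_pct(spend_2019, spend_2020):.1f}%")

# 2019 spending consistent with the stated 78.5% increase.
implied_2019 = spend_2020 / 1.785
print(f"2019 spend implied by 78.5%: ${implied_2019:.2f} billion")
```

The implied 2019 figure of about $10.53 billion still rounds to $10.5 billion, so the published numbers are consistent with each other.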

Worldwide spending on AR/VR solutions will be led by the commercial sectors, which will see their combined share of overall spending grow from less than 50% in 2020 to 68.8% in 2023. The commercial industries that are expected to spend the most on AR/VR in 2020 are retail ($1.5 billion) and discrete manufacturing ($1.4 billion). Fifteen industries are forecast to deliver CAGRs of more than 100% over the five-year forecast period, led by securities and investment services (181.4% CAGR) and banking (151.9% CAGR). Consumer spending on AR/VR ($7.0 billion in 2020) will be greater than that of any single enterprise industry but will grow at a much slower pace (39.5% CAGR). Public sector spending will maintain a fairly steady share of overall spending throughout the forecast.

"AR/VR commercial uptake will continue to expand as cost of entry declines and benefits from full deployment become more tangible. Focus is shifting from talking about technology benefits to showing real and measurable business outcomes, including productivity and efficiency gains, knowledge transfer, employee safety, and more engaging customer experiences," said Giulia Carosella, research analyst, European Industry Solutions, Customer Insights & Analysis.

Commercial use cases will account for nearly half of all AR/VR spending in 2020, led by training ($2.6 billion) and industrial maintenance ($914 million) use cases. Consumer spending will be led by two large use cases: VR games ($3.3 billion) and VR feature viewing ($1.4 billion). However, consumer spending will account for only a little over one third of all AR/VR spending in 2020, with public sector use cases making up the balance. The AR/VR use cases that are forecast to see the fastest growth in spending over the 2019-2023 forecast period are lab and field (post-secondary) (190.1% CAGR), lab and field (K-12) (168.7% CAGR), and onsite assembly and safety (129.5% CAGR). Seven other use cases will also have five-year CAGRs greater than 100%. Training, with a 61.8% CAGR, is forecast to become the largest use case in terms of spending in 2023.

Hardware will account for nearly two thirds of all AR/VR spending throughout the forecast, followed by software and services. Services spending will see strong CAGRs for systems integration (113.4%), consulting services (99.9%), and custom application development (96.1%) while software spending will have a 78.2% CAGR.

"Across enterprise industries, we are seeing a strong outlook for standalone viewers play out in use case adoption. Enterprises will drive much of these high-end headset adoption trends. In the consumer segment, more affordable viewer models for gaming and entertainment purposes will see the broadest industry adoption," said Marcus Torchia, research director, Customer Insights & Analysis.

Of the two reality types, spending on VR solutions will initially be greater than that on AR solutions. However, strong growth in AR hardware, software, and services spending (164.9% CAGR) will push overall AR spending well ahead of VR spending by the end of the forecast.

On a geographic basis, China will deliver the largest AR/VR spending total in 2020 ($5.8 billion), followed by the United States ($5.1 billion). Western Europe ($3.3 billion) and Japan ($1.8 billion) will be the next two largest regions in 2020, but Western Europe will move ahead of China into the second position by 2023. The regions that will see the fastest growth in AR/VR spending over the forecast period are Western Europe (104.2% CAGR) and the United States (96.1% CAGR).
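A rough projection shows why Western Europe's faster growth lifts it to second rather than first place. The sketch applies each region's quoted CAGR to its 2020 figure for three years; this is only an approximation, since IDC's CAGRs span the whole 2019-2023 period, and China is omitted because its growth rate is not given. The helper `project` is hypothetical.

```python
def project(value: float, cagr_pct: float, years: int) -> float:
    """Grow `value` at a constant `cagr_pct` percent per year for `years` years."""
    return value * (1 + cagr_pct / 100) ** years


# 2020 spending (billions of US dollars) and forecast-period CAGRs quoted by IDC.
regions = {
    "United States": (5.1, 96.1),
    "Western Europe": (3.3, 104.2),
}

for name, (spend_2020, rate) in regions.items():
    print(f"{name}: ~${project(spend_2020, rate, 3):.1f} billion in 2023 (rough)")
```

Even with the higher growth rate, Western Europe's smaller 2020 base keeps it behind the United States in this simple projection.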

IDC's Managed CloudView 2019, a global primary research-based study sampling 1,500 buyers and nonbuyers of managed cloud services, highlights how enterprise expectations of managed service providers (SPs), along with their ecosystem of public cloud provider partners, are shifting. These changing expectations will drive fundamental changes both in how buyers consume cloud services and in how providers position their business models to meet customer needs for these services.

"Enterprises continue to see tremendous value in utilizing managed SPs for managed cloud services to support transformation to cloud and provide multicloud management capabilities that help to orchestrate and manage across a broad array of hyperscalers and SaaS provider partners, the full range of cloud options (private, public, hybrid), and across the lifecycle of services, while supporting new innovations, critical business processes and industry requirements," said David Tapper, vice president, Outsourcing and Managed Cloud Services at IDC. "However, a combination of changing customer perceptions and expectations, technological innovation, and pressures emerging from coopetition between managed SPs and their ecosystem partners of hyperscalers appear to be creating a tipping point for which managed SPs need to clearly assess their market position and what their long-term roles will be in optimizing their opportunities for managed cloud services."

Key findings from IDC's worldwide Managed CloudView 2019 study include the following: